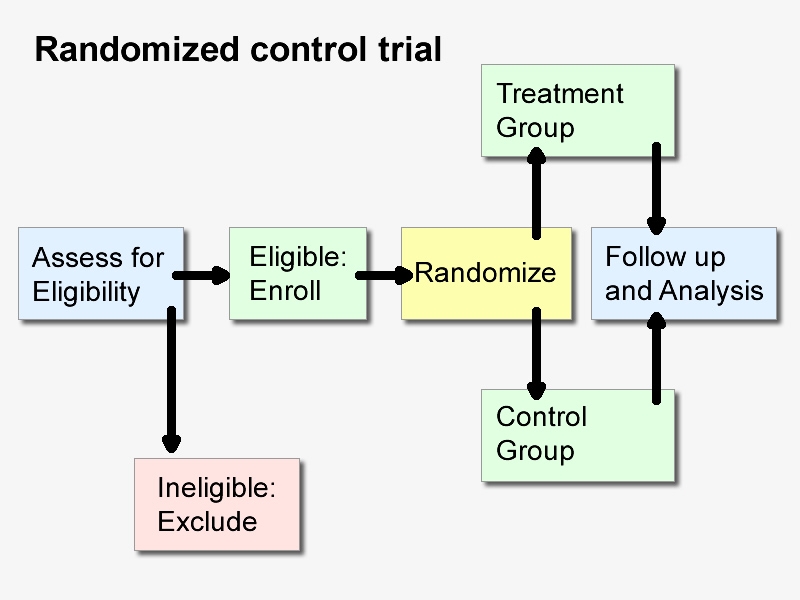

Randomized controlled trials (RCTs) are the gold standard for evaluating the safety and efficacy of a treatment. In some cases, however, an RCT is not feasible, so researchers have proposed designing observational studies according to the principles of an RCT: through “target trial emulation,” an observational study is designed to mimic an RCT in order to improve its validity.

Randomized controlled trials (RCTs) are the reference standard for evaluating the relative safety and efficacy of medical interventions. Analyses of observational data from epidemiological studies and medical databases (including electronic health record [EHR] and medical claims data) offer large sample sizes, timely access to data, and the ability to assess “real-world” effects, but they are prone to biases that weaken the evidence they produce. It has long been suggested that observational studies be designed according to the principles of RCTs to improve the validity of their findings. Several methodological approaches attempt to draw causal inferences from observational data, and a growing number of researchers design observational studies to emulate a hypothetical RCT through “target trial emulation.”

The target trial emulation framework requires that the design and analysis of an observational study be consistent with a hypothetical RCT that addresses the same research question. Although this framework provides a structured approach to design, analysis, and reporting that can improve the quality of observational studies, studies conducted this way remain prone to bias from multiple sources, including confounding by unobserved covariates. Such studies therefore require a detailed specification of design elements, analytic methods that address confounding, and reporting of sensitivity analyses.

In studies using the target trial emulation approach, researchers first specify the hypothetical RCT (the “target trial”) that would ideally be performed to answer a particular research question, and then specify observational study design elements that are consistent with that target trial. Necessary design elements include eligibility (inclusion and exclusion) criteria, participant selection, treatment strategies, treatment assignment, the start and end of follow-up, outcome measures, the causal contrast (the treatment effect to be estimated), and the statistical analysis plan (SAP). For example, Dickerman et al. used a target trial emulation framework and EHR data from the U.S. Department of Veterans Affairs (VA) to compare the effectiveness of the BNT162b2 and mRNA-1273 vaccines in preventing SARS-CoV-2 infection, hospitalization, and death.

A key element of emulating a target trial is setting “time zero,” the point in time at which participant eligibility is assessed, treatment is assigned, and follow-up begins. In the VA Covid-19 vaccine study, time zero was defined as the date of the first vaccine dose. Aligning eligibility assessment, treatment assignment, and the start of follow-up at time zero reduces important sources of bias, particularly immortal time bias, which arises when treatment strategies are determined after follow-up has started, and selection bias, which arises when follow-up starts after treatment has been assigned.

In the VA Covid-19 vaccine study, if participants had been assigned to treatment groups based on when they received the second vaccine dose while follow-up started at the first dose, immortal time bias would have arisen, because participants must survive until the second dose to be classified as having received it. Conversely, if treatment groups had been assigned at the first dose but follow-up started at the second dose, selection bias would have arisen, because only people who received both doses would be included.
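The immortal time bias described above can be reproduced with a toy simulation. This is a minimal sketch under made-up assumptions that do not come from the VA study: a constant daily event hazard, a second dose scheduled at day 28, an 80% chance of intending to take it, and a second dose that has no effect at all on the outcome. Classifying people by whether they ever received the second dose while starting follow-up at the first dose makes the second dose look protective. Restarting follow-up at a common landmark (day 28) among people still event-free, so that group membership is known at the new time zero, recovers a risk ratio near 1.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Person:
    event_day: float        # days from the first dose (day 0) to the outcome
    got_second_dose: bool   # observed exposure, only known after day 0


def simulate_cohort(n=100_000, seed=1, horizon=168, dose2_day=28,
                    p_intend=0.8, risk_by_horizon=0.30):
    """Hypothetical cohort in which the second dose has NO effect on the outcome."""
    rng = random.Random(seed)
    daily_hazard = -math.log(1 - risk_by_horizon) / horizon
    cohort = []
    for _ in range(n):
        event_day = rng.expovariate(daily_hazard)  # same hazard for everyone
        intends = rng.random() < p_intend
        # Only people still event-free on dose2_day can actually receive dose 2.
        cohort.append(Person(event_day, intends and event_day > dose2_day))
    return cohort


def risk_ratio(cohort, horizon):
    """Risk of an event by `horizon`: dose-2 recipients vs non-recipients."""
    def risk(group):
        return sum(p.event_day <= horizon for p in group) / len(group)
    treated = [p for p in cohort if p.got_second_dose]
    untreated = [p for p in cohort if not p.got_second_dose]
    return risk(treated) / risk(untreated)


cohort = simulate_cohort()

# Misaligned: follow-up starts at dose 1 (day 0), but the group is defined by a
# later event (dose 2), so recipients are "immortal" from day 0 to day 28.
naive_rr = risk_ratio(cohort, horizon=168)

# Landmark: new time zero at day 28 for everyone still event-free; group
# membership is fully known at this time zero, so no immortal time remains.
landmark_rr = risk_ratio([p for p in cohort if p.event_day > 28], horizon=168)

print(f"misaligned RR = {naive_rr:.2f}, landmark RR = {landmark_rr:.2f}")
```

Under these settings the misaligned ratio lands well below 1 (roughly 0.6 by direct calculation from the assumed hazard) even though the true effect is null, while the landmark ratio stays close to 1. Note that the landmark analysis answers a narrower question (the effect of a second dose among people event-free at day 28), which is exactly why the time zero has to be chosen deliberately.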

Target trial emulation also helps avoid a common difficulty in observational studies: a treatment effect that is not clearly defined. In the VA Covid-19 vaccine study, researchers matched participants on baseline characteristics and estimated treatment effectiveness as the difference in outcome risk at 24 weeks. This approach explicitly defines the effect estimate as the difference in Covid-19 outcomes between vaccinated groups with balanced baseline characteristics, similar to the effect an RCT would estimate for the same question. As the study authors point out, comparing the outcomes of two similar vaccines may be less affected by confounding than comparing the outcomes of vaccinated and unvaccinated people.
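A minimal sketch of this kind of estimate, assuming 1:1 exact matching on the four baseline characteristics the text names (age, sex, ethnicity, urbanization) and complete 24-week follow-up with no censoring. The `Participant` records, the category labels, and the first-come pairing within strata are hypothetical simplifications; the published study's matching procedure and estimator may differ.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional

MATCH_KEYS = ("age_group", "sex", "ethnicity", "urbanicity")


@dataclass(frozen=True)
class Participant:
    vaccine: str                  # "BNT162b2" or "mRNA-1273"
    age_group: str
    sex: str
    ethnicity: str
    urbanicity: str
    event_week: Optional[float]   # weeks from time zero to outcome; None = no event


def match_exact(participants):
    """Pair BNT162b2 and mRNA-1273 recipients 1:1 within identical covariate strata."""
    strata = defaultdict(lambda: {"BNT162b2": [], "mRNA-1273": []})
    for p in participants:
        key = tuple(getattr(p, k) for k in MATCH_KEYS)
        strata[key][p.vaccine].append(p)
    pairs = []
    for arms in strata.values():
        # zip stops at the shorter arm, so surplus participants stay unmatched
        pairs.extend(zip(arms["BNT162b2"], arms["mRNA-1273"]))
    return pairs


def risk_difference(pairs, weeks=24):
    """Risk(BNT162b2) minus risk(mRNA-1273) by `weeks`, assuming no censoring."""
    def had_event(p):
        return p.event_week is not None and p.event_week <= weeks
    risk_bnt = sum(had_event(a) for a, _ in pairs) / len(pairs)
    risk_mrna = sum(had_event(b) for _, b in pairs) / len(pairs)
    return risk_bnt - risk_mrna


# Made-up records for illustration only.
cohort = [
    Participant("BNT162b2", "65+", "M", "E1", "urban", 10.0),
    Participant("mRNA-1273", "65+", "M", "E1", "urban", None),
    Participant("BNT162b2", "<65", "F", "E2", "rural", None),
    Participant("mRNA-1273", "<65", "F", "E2", "rural", None),
    Participant("BNT162b2", "<65", "F", "E2", "rural", 30.0),  # no partner left
]
pairs = match_exact(cohort)
print(len(pairs), risk_difference(pairs))  # prints: 2 0.5
```

The unmatched fifth participant is simply dropped, which mirrors how matching trades sample size for baseline balance.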

Even if the design elements are successfully aligned with those of an RCT, the validity of a study using the target trial emulation framework depends on its assumptions, its design and analysis methods, and the quality of the underlying data. The validity of RCT results also depends on the quality of their design and analysis, but observational studies face the additional threat of confounding. Because they are not randomized, observational studies are not protected against confounding the way RCTs are, and participants and clinicians are not blinded, which may affect outcome assessment and study results. In the VA Covid-19 vaccine study, researchers used matching to balance the distribution of baseline characteristics between the two groups, including age, sex, ethnicity, and the degree of urbanization where participants lived. Differences in the distribution of other characteristics that may be associated with the risk of Covid-19 infection, such as occupation, remain possible sources of residual confounding.
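How far could an unmeasured factor such as occupation move an estimate? One classical back-of-the-envelope check, offered here as an illustration rather than as the study's method, is the external-adjustment formula for a single binary unmeasured confounder U. It assumes that U has the same risk ratio with the outcome in both groups and does not modify the treatment effect; the prevalences and risk ratio in the example are hypothetical inputs an analyst would have to supply, not values from the VA study.

```python
def bias_factor(prev_u_group_a, prev_u_group_b, rr_u_outcome):
    """Factor by which a binary confounder U inflates the observed risk ratio (A vs B).

    Assumes U's risk ratio with the outcome is the same in both groups and that U
    does not modify the treatment effect on the risk-ratio scale.
    """
    return ((prev_u_group_a * (rr_u_outcome - 1) + 1)
            / (prev_u_group_b * (rr_u_outcome - 1) + 1))


def adjusted_rr(observed_rr, prev_u_group_a, prev_u_group_b, rr_u_outcome):
    """Observed risk ratio with the hypothesized confounding by U divided out."""
    return observed_rr / bias_factor(prev_u_group_a, prev_u_group_b, rr_u_outcome)


# Hypothetical scenario: observed RR of 0.80 (group A vs group B); a high-exposure
# occupation is held by 30% of group A and 20% of group B and doubles outcome risk.
print(round(adjusted_rr(0.80, 0.30, 0.20, 2.0), 3))  # prints: 0.738
```

Because the hypothetical confounder is more common in group A and raises risk, it makes group A look worse than it is; removing it moves the ratio from 0.80 to about 0.74. Repeating the calculation over a grid of plausible prevalences and risk ratios shows how sensitive a conclusion is to a confounder like occupation.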

Many studies using target trial emulation rely on “real-world data” (RWD), such as EHR data. RWD are timely, scalable, and reflective of treatment patterns in routine care, but these benefits must be weighed against data quality issues: missing data; inaccurate or inconsistent identification and definition of participant characteristics and outcomes; inconsistent administration of treatment; differing frequencies of follow-up assessments; and loss to follow-up when participants move between healthcare systems. The VA study used data from a single EHR system, which mitigates concerns about data inconsistency. However, incomplete ascertainment and documentation of variables, including comorbidities and outcomes, remains a risk.

Participants in the analytic sample are often selected from retrospective data, and excluding people with missing baseline information can introduce selection bias. Although these problems are not unique to observational studies, they are sources of residual bias that target trial emulation cannot directly address. In addition, observational studies are often not preregistered, which worsens problems such as the sensitivity of findings to design choices and publication bias. Because different data sources, designs, and analysis methods can yield very different results, the study design, the analysis methods, and the rationale for choosing the data source must be specified in advance.

Guidelines exist for conducting and reporting studies that use the target trial emulation framework; they improve study quality and help ensure that reports are detailed enough for readers to evaluate them critically. First, the study protocol and SAP should be prepared before data analysis begins. The SAP should describe the statistical methods used to address bias due to confounding, as well as sensitivity analyses that assess the robustness of the results to major sources of bias such as confounding and missing data.

The title, abstract, and methods section should make clear that the study is observational, to avoid confusion with an RCT, and should distinguish between the observational study that was conducted and the hypothetical target trial it attempts to emulate. Researchers should describe the data source and its quality, such as the reliability and validity of the data elements, and, if possible, cite other published studies that used the same data source. Investigators should also provide a table outlining the design elements of the target trial and its observational emulation, and state clearly when eligibility was determined, follow-up started, and treatment was assigned.
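A sketch of such a table as a small data structure plus a plain-text renderer. The rows use only details this article gives for the VA example; elements it does not report (for example, the eligibility criteria) are left out rather than guessed. The `ProtocolElement` class and the column wording are illustrative assumptions, not a reporting standard.

```python
from dataclasses import dataclass

HEADERS = ("Design element", "Target trial", "Observational emulation")


@dataclass(frozen=True)
class ProtocolElement:
    element: str
    target_trial: str
    emulation: str


# Only elements described in the article; unreported elements are omitted.
VA_EXAMPLE = [
    ProtocolElement("Treatment strategies", "BNT162b2 vs mRNA-1273",
                    "BNT162b2 vs mRNA-1273 as recorded in VA EHR data"),
    ProtocolElement("Treatment assignment", "Randomization",
                    "Matching on age, sex, ethnicity, urbanization"),
    ProtocolElement("Time zero", "Randomization", "Date of first vaccine dose"),
    ProtocolElement("Outcomes", "SARS-CoV-2 infection, hospitalization, death",
                    "Same outcomes, ascertained from EHR data"),
    ProtocolElement("Causal contrast", "24-week risk difference",
                    "24-week risk difference in matched groups"),
]


def render_table(rows):
    """Render rows as an aligned plain-text table with a header separator."""
    cells = [HEADERS] + [(r.element, r.target_trial, r.emulation) for r in rows]
    widths = [max(len(row[i]) for row in cells) for i in range(len(HEADERS))]
    lines = [" | ".join(c.ljust(w) for c, w in zip(row, widths)).rstrip()
             for row in cells]
    lines.insert(1, "-+-".join("-" * w for w in widths))
    return "\n".join(lines)


print(render_table(VA_EXAMPLE))
```

Keeping the target trial and its emulation side by side in one structure makes any mismatch, such as a time zero that differs from the point of treatment assignment, visible at a glance.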

In target trial emulations in which a treatment strategy cannot be determined at baseline (such as studies of treatment duration or of combination therapies), the approach used to address immortal time bias should be described. Researchers should report meaningful sensitivity analyses that assess the robustness of the results to key sources of bias, including quantifying the potential impact of unobserved confounders and examining how the results change when key design elements are specified differently. Negative control outcomes (outcomes that the exposure of interest is not expected to affect) may also help quantify residual bias.
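One widely used way to quantify the potential impact of unobserved confounding, though not necessarily the one intended here, is the E-value of VanderWeele and Ding: the minimum strength of association, on the risk-ratio scale, that an unmeasured confounder would need with both the treatment and the outcome, conditional on the measured covariates, to fully explain away an observed risk ratio. A minimal sketch for risk ratios, with illustrative inputs:

```python
import math


def e_value(rr):
    """E-value for a point estimate on the risk-ratio scale."""
    if rr <= 0:
        raise ValueError("risk ratio must be positive")
    rr = 1 / rr if rr < 1 else rr  # protective estimates are inverted first
    return rr + math.sqrt(rr * (rr - 1))


def e_value_ci(lower, upper):
    """E-value for the confidence limit closest to the null (1.0)."""
    if lower <= 1 <= upper:
        return 1.0  # the interval already includes the null
    limit = lower if lower > 1 else upper
    return e_value(limit)


print(round(e_value(2.0), 2))      # prints: 3.41
print(e_value_ci(0.9, 1.2))        # prints: 1.0
```

An E-value of about 3.41 for an observed risk ratio of 2.0 means a confounder associated with both treatment and outcome by risk ratios of at least 3.41 each could explain the estimate away; weaker confounding could not. Whether such a confounder is plausible remains a subject-matter judgment.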

Although observational studies can address questions for which RCTs may not be feasible and can take advantage of RWD, they also have many potential sources of bias. The target trial emulation framework attempts to address some of these biases, but the emulation must be designed and reported carefully. Because confounding can cause bias, sensitivity analyses must be performed to assess the robustness of the results to unobserved confounders, and the results must be interpreted in light of how they change under different assumptions about those confounders. If rigorously implemented, the target trial emulation framework can be a useful way to systematically design observational studies, but it is not a panacea.

Post time: Nov-30-2024